Two hundred and twenty-three people raised their hands in December. They’d been researching fiberglass pool kits for weeks: comparing shell sizes, reading installation guides, taking backyard measurements on a Saturday morning. They finally filled out a contact form. And then they waited. A day. Three days. A full week. By the time someone called, most had already talked to a competitor. Some had already signed.

Those 223 leads weren’t lost because the ads were wrong. They were lost because nobody picked up the phone.

The month the marketing worked too well

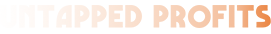

Here’s the situation: a client running Google Ads for fiberglass pool kits had a strong November. Around 750 leads, 29 deals created, mean response time of 1.2 days. Not perfect, but the machine was working. Marketing sent leads, sales worked them, deposits came in.

Then December hit. Search volume climbed. The ad campaigns, unchanged from November, delivered 900+ leads. High-intent queries for pool kits and fiberglass shells actually converted better than the month before, rising from 7.1% to 7.7%. Wrong-product traffic sat at 0.3%. By every marketing metric, December was the best month of the year.

The pipeline told a different story. Deal creation dropped 48%, from 29 to 15. The time between sending a quote and collecting a deposit tripled, stretching from 9 days to 27. And the number that still sits with me: mean response time went from 1.2 days to 4.3 days.

More leads came in than the team could work. Response times slipped. Fewer leads made it into the pipeline. It wasn’t a quality problem. It was a capacity problem wearing a quality mask.

Finding the fracture in two hours

The client’s first instinct was reasonable: the ads must be attracting the wrong people. December traffic feels different—tire-kickers, holiday browsers, people killing time. That’s the assumption most operators would make.

So I ran the diagnostic. I pulled the Google Ads search term data and the full HubSpot lead pipeline into Claude and asked it to do two things: categorise every search query by intent level, and map the entire lead-to-deposit journey for both months.

Two hours. That’s how long the whole analysis took—search intent classification, response time distributions, stage-by-stage funnel comparison. What came back was unambiguous.

The search intent profile between November and December was nearly identical. High-intent queries hadn’t dropped. Conversion rates on those queries had actually improved. The traffic wasn’t the problem.
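For readers who want to reproduce the comparison, the aggregation step can be sketched in Python. This is a minimal sketch, assuming the search terms have already been labelled by intent (here that labelling was done with Claude) and exported as hypothetical `(intent_label, clicks, conversions)` rows; the function name and row shape are illustrative, not part of any Google Ads API.

```python
from collections import defaultdict

# Hypothetical row shape: one search term, already labelled with an intent bucket.
SearchTermRow = tuple[str, int, int]  # (intent_label, clicks, conversions)

def conversion_by_intent(rows: list[SearchTermRow]) -> dict[str, float]:
    """Return conversions / clicks for each intent label, skipping labels with no clicks."""
    clicks: dict[str, int] = defaultdict(int)
    conversions: dict[str, int] = defaultdict(int)
    for label, row_clicks, row_conversions in rows:
        clicks[label] += row_clicks
        conversions[label] += row_conversions
    return {
        label: conversions[label] / clicks[label]
        for label in clicks
        if clicks[label] > 0
    }
```

Running it once on the November export and once on the December export gives the side-by-side intent comparison in a single table.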

The problem was downstream. In November, only 2% of leads waited more than seven days for first contact. In December, that number was 24%. Nearly a quarter of all inbound leads sat untouched for a week or longer. You know what a lead is worth after seven days of silence? About the same as a business card left in a jacket pocket.
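Why track the share of slow responses and not just the mean? A mean of 4.3 days could come from every lead waiting about four days, or from most leads being contacted quickly while a quarter wait more than a week. Only the tail share tells those apart. A minimal sketch, assuming a hypothetical list of per-lead delays (hours from form submission to first contact) pulled from a CRM export:

```python
from statistics import mean

def response_time_summary(delays_hours: list[float], threshold_days: float = 7.0) -> dict:
    """Summarise first-contact delays: mean in days plus the share of leads past a threshold."""
    if not delays_hours:
        raise ValueError("need at least one lead")
    delays_days = [h / 24 for h in delays_hours]
    over_threshold = sum(1 for d in delays_days if d > threshold_days)
    return {
        "leads": len(delays_days),
        "mean_days": round(mean(delays_days), 1),
        "share_over_threshold": round(over_threshold / len(delays_days), 2),
    }
```

With the toy input `[24, 48, 72, 240]` hours, the mean is 4.0 days and a quarter of the leads (0.25) crossed the seven-day line.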

This is a pattern Bain flagged in their 2025 Technology Report: sales teams consistently trail other functions in adopting AI, and the cost shows up exactly like this. Not as a dramatic failure, but as a slow bleed—response times stretching, deals stalling, pipeline velocity decaying while marketing metrics look fine. The report found that early AI adoption in sales produced 30% or better improvement in win rates, but only when teams reimagined their processes rather than just automating existing ones.

That distinction matters. Because the answer to “we have too many leads” is almost never “hire more reps.” At least, it shouldn’t be the first answer.

The three places leads go to die

Once you stop blaming the traffic, the actual failure points become obvious. There are three, and they compound each other.

The first contact gap. Research shows that 78% of customers buy from the business that responds first: not the cheapest, not the best, the first. The odds of converting a lead fall steeply within the first hour, with one study finding a 21x drop in the odds of qualifying a lead when first contact comes at 30 minutes instead of five. And the average business? Takes 47 hours to respond. In December, our client’s mean response time was 4.3 days, roughly 103 hours and more than double that already brutal average, in a market where competitors are a single Google search away. The sales team wasn’t slow because they were lazy. They were slow because there were 900 leads and not enough hands.

The post-quote vacuum. A prospect who receives a quote is in the most fragile stage of the buying process. They’re comparing. They’re second-guessing. They’re Googling “fiberglass pool problems” at 11 PM. In November, the team moved quotes to deposits in 9 days. In December, that window ballooned to 27 days. During those 18 extra days, competitors weren’t sitting still.

The invisible triage problem. When volume exceeds capacity, reps start making silent prioritisation decisions. They cherry-pick the leads that feel warmest or most familiar. They push the “maybe” prospects to tomorrow. Tomorrow becomes next week. The leads that needed one more touch disappear into the CRM’s grey zone—not closed-lost, just abandoned.

You’ve seen this. Maybe not with pool kits, but with whatever your team sells. A month where everything looks good on the dashboard until someone asks, “So where are the deals?”

“But you can’t automate a $50,000 sale”

This is the objection, and it’s a fair one. These aren’t $29/month SaaS subscriptions. A fiberglass pool kit is a major purchase—often the biggest home improvement project a family will take on. The sales process involves trust, technical consultation, site-specific advice. You can’t hand that to a chatbot.

And nobody should.

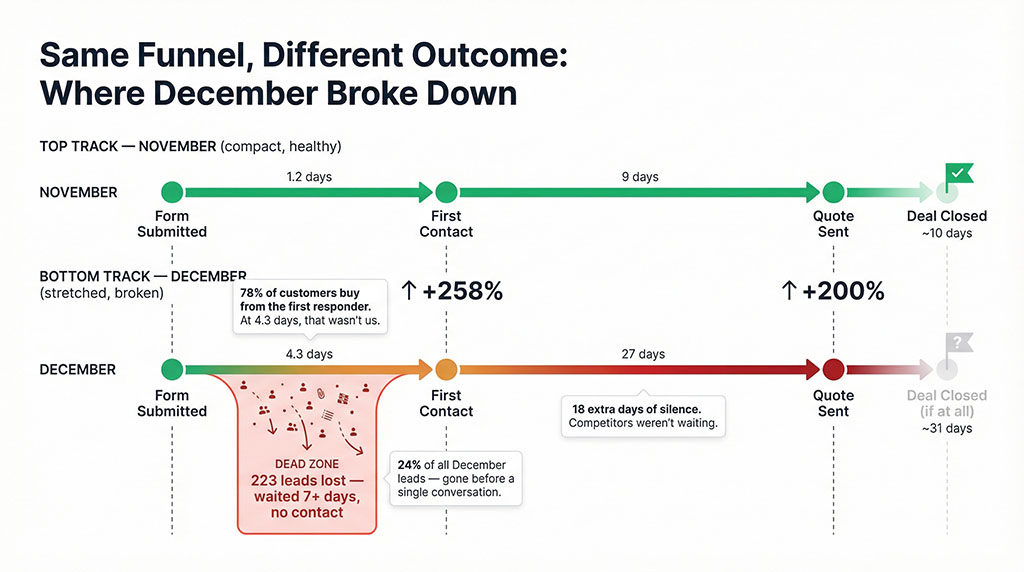

The misunderstanding about AI in sales is that it replaces the conversation. It doesn’t. It replaces the silence.

Think about where the December pipeline actually broke. It didn’t break during the consultation call. It didn’t break during the quote walkthrough. It broke in two specific windows: the hours between a form submission and first human contact, and the days between a sent quote and a signed deposit. Both are gaps where the prospect hears nothing—and in that nothing, doubt grows.

An AI agent that sends a personalised acknowledgment within minutes of a form submission, referencing the specific pages the prospect visited? That’s not replacing the salesperson. That’s holding the door open until the salesperson can walk through it.

And you don’t have to go all-in on day one. Many conversational AI platforms, including tools like HighLevel and HubSpot’s Breeze, offer a suggestive mode where the AI drafts responses for a human to review, edit, and send. Your rep still controls every message. The AI just eliminates the blank-page problem of figuring out what to say to 90 different leads. Once you trust the output, you graduate to full autopilot for the initial contact window. It’s a crawl-walk-run transition, not a cliff edge.

A structured follow-up sequence that triggers the moment a quote is sent—addressing common concerns about installation timelines, sharing case studies from similar backyards, nudging toward a booking link? That’s not automating the sale. That’s preventing the silence that kills it.

Bain’s research backs this up: the companies seeing 30%+ improvement in win rates aren’t the ones that automated their sales calls. They’re the ones that used AI to free sellers for the interactions that actually require human judgment—and eliminated the dead air everywhere else.

Reducing the load before adding headcount

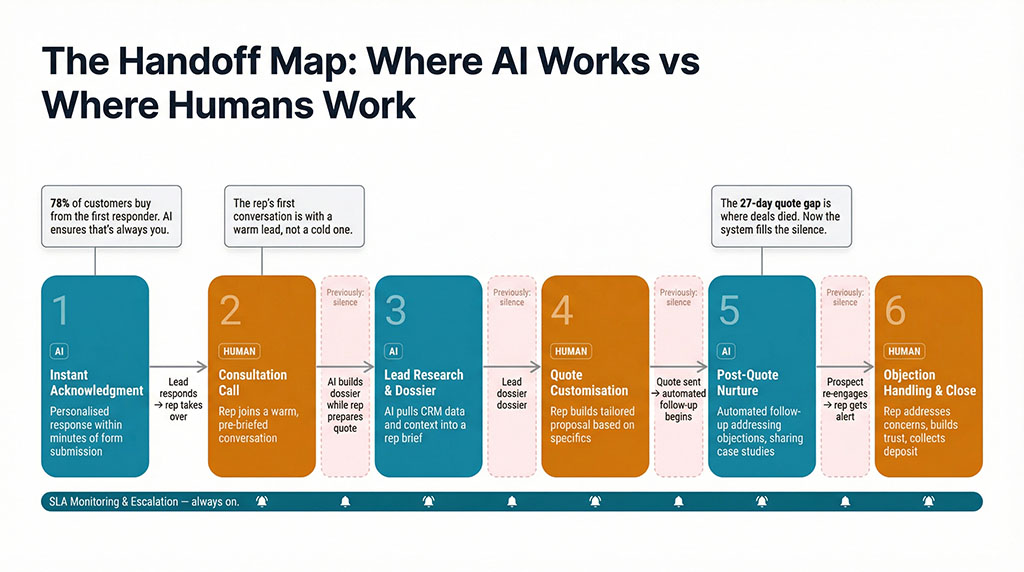

Our approach with this client follows a specific sequence, and the order matters. Step one is reducing the load on the existing salesperson. Step two—if needed after that—is bringing on new staff.

Here’s why that order is non-negotiable: if you hire into a broken process, you get two people doing broken work instead of one. The Bain report calls this “layering AI onto mediocre processes”—it just accelerates mediocre outcomes. Fix the machine first.

The first four hours, handled. The most immediate fix is deploying an AI agent—in this case, HubSpot’s Breeze Prospecting Agent—to own the 0-to-4-hour response window. When a lead submits a form, the agent pulls their browsing history from the CRM (which pages they visited, how many times they looked at the pricing page, what shell sizes they explored), builds a context brief, and sends a personalised response. Not a generic “thanks for your enquiry” autoresponder. A message that says, “I noticed you’ve been looking at the 8-metre shells—here’s what most homeowners in your area need to know about council approval before ordering.”

The human rep gets looped in once the lead responds or books a meeting. Their first interaction is with a warm, acknowledged prospect who already feels heard—not a cold lead wondering if anyone’s home.
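The context-brief step can be pictured as a small aggregation over page-view events. This is a deterministic stand-in, not how Breeze Prospecting Agent works internally: the `PageView` shape, the URL patterns, and the message template are all hypothetical, and in the workflow above an AI agent writes the actual message.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional

@dataclass
class PageView:
    # Hypothetical event shape; real CRM page-view exports will differ.
    url: str
    shell_size_m: Optional[float] = None  # set only on pages for a specific shell

def build_context_brief(views: list[PageView]) -> dict:
    """Reduce raw page views to the few facts a first message can reference."""
    sizes = Counter(v.shell_size_m for v in views if v.shell_size_m is not None)
    return {
        "pricing_page_views": sum(1 for v in views if v.url.startswith("/pricing")),
        "most_viewed_shell_m": sizes.most_common(1)[0][0] if sizes else None,
        "distinct_pages": len({v.url for v in views}),
    }

def opening_line(brief: dict) -> str:
    """Hypothetical template for the first sentence of the acknowledgment."""
    size = brief["most_viewed_shell_m"]
    if size is None:
        return "Thanks for getting in touch about a fiberglass pool."
    return f"I noticed you've been looking at the {size:g}-metre shells."
```

Keeping the brief as plain data means a reviewing rep can see exactly which facts the opening line was built from.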

The quote-to-deposit gap, bridged. The 27-day gap between quote and deposit is where the second automation layer sits. The moment a quote is published in HubSpot, a follow-up sequence kicks in—timed emails addressing the specific objections that surface at this stage (installation complexity, seasonal timing, financing options). If the prospect views the quote three or more times without signing, the system fires a real-time alert to the rep: this person is ready to talk, call them now. If 48 hours pass with no action, an SMS reminder nudges them back.

This isn’t about removing the human. It’s about making sure the human shows up at the right moment with the right context, rather than spending their day manually checking who needs a follow-up.
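The two triggers in the quote-to-deposit layer reduce to a pair of rules evaluated against each open quote. A minimal sketch, assuming hypothetical field names and action labels; “no action” is read here as no prospect activity since the quote was sent or last touched, which is an interpretation rather than a HubSpot setting.

```python
from datetime import datetime, timedelta
from typing import Optional

VIEW_ALERT_THRESHOLD = 3                # "three or more" quote views
NO_ACTION_WINDOW = timedelta(hours=48)  # quiet period before the SMS nudge

def quote_actions(sent_at: datetime, view_count: int, signed: bool,
                  last_activity_at: Optional[datetime], now: datetime) -> list[str]:
    """Return the follow-up actions due for one open quote (action names are hypothetical)."""
    if signed:
        return []  # quote signed: nothing left to chase
    actions = []
    if view_count >= VIEW_ALERT_THRESHOLD:
        actions.append("alert_rep")  # repeated views signal readiness to talk
    last_touch = last_activity_at or sent_at
    if now - last_touch >= NO_ACTION_WINDOW:
        actions.append("send_sms_reminder")
    return actions
```

As written, the rules fire again on every evaluation; a production workflow would also record that an alert or SMS already went out so the prospect isn’t nudged twice for the same lull.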

SLA enforcement that actually works. The December blowout could have been caught at day two if an escalation system had existed. The fix: a tiered alert structure. If a lead goes 15 minutes without a first response, the assigned rep gets a Slack ping. At 4 hours, the sales manager gets notified. At 24 hours, an escalation hits a shared channel and the lead gets reassigned automatically. No lead sits in a queue hoping someone remembers.
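The tiered alert structure maps cleanly onto a threshold table. A minimal sketch; the action names are hypothetical labels, and actually sending the Slack message or reassigning the lead would go through whatever integration the CRM provides.

```python
from datetime import timedelta

# Escalation tiers from the SLA structure; action names are hypothetical labels.
ESCALATION_TIERS = [
    (timedelta(minutes=15), "slack_ping_rep"),
    (timedelta(hours=4), "notify_sales_manager"),
    (timedelta(hours=24), "post_shared_channel_and_reassign"),
]

def due_escalations(time_waiting: timedelta) -> list[str]:
    """Return every escalation step reached by a lead that still hasn't been picked up."""
    return [action for threshold, action in ESCALATION_TIERS if time_waiting >= threshold]
```

Returning every tier reached, not just the highest, makes it easy to check that earlier alerts actually fired before the lead was reassigned.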

Smart scoring to sort signal from noise. Not all 900 leads need the same effort. A prospect who visited the pricing page four times and used the pool size calculator scores differently from someone who clicked a single Facebook ad and bounced. Lead scoring in HubSpot lets the team focus their finite human attention on the prospects showing genuine buying behaviour—while the AI handles initial engagement for everyone else.
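To make the scoring idea concrete, here is a minimal sketch with placeholder weights. The point values and the 50-point routing threshold are invented for illustration, not HubSpot defaults; in HubSpot the equivalent rules are configured in the CRM rather than written as code.

```python
# Placeholder weights for illustration only; not HubSpot defaults.
PRICING_VIEW_POINTS = 10
CALCULATOR_POINTS = 25
SINGLE_PAGE_BOUNCE_POINTS = -15
REP_PRIORITY_THRESHOLD = 50

def score_lead(pricing_views: int, used_size_calculator: bool,
               bounced_after_one_page: bool) -> int:
    """Add up buying signals from browsing behaviour into a single score."""
    score = pricing_views * PRICING_VIEW_POINTS
    if used_size_calculator:
        score += CALCULATOR_POINTS
    if bounced_after_one_page:
        score += SINGLE_PAGE_BOUNCE_POINTS
    return score

def route(score: int) -> str:
    """Send high scorers to the rep; leave everyone else with the AI agent."""
    return "rep_priority" if score >= REP_PRIORITY_THRESHOLD else "ai_engagement"
```

The two prospects from the paragraph above land at 65 and -15 points, so only the first goes to the rep’s priority list.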

What this actually looks like when it works

Run the December numbers through the redesigned system and the maths shifts.

Those 223 leads that waited seven-plus days? Every one of them gets a personalised response within 15 minutes. Not from the salesperson—from an AI agent that’s read their browsing history and knows which product they care about. The rep’s phone doesn’t ring until there’s a qualified conversation waiting.

The 27-day quote-to-deposit stretch? It compresses, because the follow-up no longer depends on a single person remembering to check their pipeline on a Friday afternoon. The system nudges. The system reminds. The system alerts when a prospect is circling back for the third time.

And the salesperson? They stop spending their morning triaging a list of 90 unworked leads and start spending it on the five conversations most likely to close. That’s the shift Bain is describing when they talk about “freeing sellers for higher-value customer interactions.” It’s not abstract. It’s the difference between making 40 rushed calls and having five good ones.

The instinct when volume outpaces capacity is always to add people. Sometimes that’s right. But more often, the better first move is to strip out every task that doesn’t require human judgment and let the humans do what they’re actually good at—building trust, answering hard questions, and closing.

Two hundred and twenty-three hands went up in December. The system we’re building now makes sure every single one of them gets shaken.

See Where Your Leads Are Dying

We’ll map your current lead-to-close workflow against the AI-first model — and show you exactly where response gaps, stalled quotes, and silent follow-up windows are costing you deals.

Most clients find 2–3 stages where leads sit in silence long enough to go cold — and nobody noticed because the marketing dashboard looked fine. Takes 30 minutes.

You don’t need more leads. You need to stop losing the ones already raising their hand.