The Slack message arrived at 9:14 AM. A marketing coordinator—let’s call her Jess—had used Claude to rewrite a landing page overnight. Conversion rate jumped 22% by lunch. Her manager’s response wasn’t congratulations. It was: “We haven’t approved that tool. Please revert to the original copy.”

Jess didn’t revert. She stopped telling her manager.

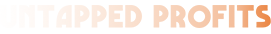

She’s not alone. Slack’s 2024 Workforce Index—17,372 workers across 15 countries—found 48% of employees won’t tell their manager they used AI on a task. KPMG and the University of Melbourne put it higher: 57% actively conceal it. And 45% have used AI tools their company explicitly banned.

Read that again. Nearly half your workforce is running a parallel operation. Producing faster work, better output, genuine competitive advantage—in secret. Not because the technology is dangerous. Because their managers made it feel that way.

This is the most expensive management failure of the decade. And the data behind it is worse than you think.

The Barrier Nobody Wants to Name

McKinsey’s January 2025 “Superagency” report surveyed 3,613 employees and 238 C-suite executives. The headline: only 1% of companies believe they’ve reached AI maturity. Despite 92% planning to increase investment.

The biggest operational barrier to scaling? Not cost. Not technical complexity. Not workforce readiness.

Leadership alignment.

Here’s where it gets uncomfortable. C-suite leaders are more than twice as likely to blame employee readiness as they are to blame their own leadership. But employees are three times more likely than leaders realise to believe AI could automate 30% of their work within a year. The workers are ready. The corner offices just haven’t noticed—because the workers learned to stop telling them.

BCG confirmed this across 1,000 CxOs in 59 countries: 74% of companies can’t scale AI value. The breakdown? Seventy percent of obstacles are people- and process-related. Not algorithms. Not infrastructure. People. Companies that succeed follow what BCG calls a 10/20/70 rule: 10% of resources on algorithms, 20% on technology, 70% on people and process change. Companies that fail invert the ratio—pouring money into tools while ignoring the humans who refuse to use them.

You’ve probably felt this in your own organisation. The tools are there. The budget got approved. Six months later, adoption is stuck at the same pilot group that volunteered on day one.

Gallup put a number on why. Employees whose manager actively supports AI use are nearly nine times more likely to say it helps them do their best work. Not twice. Not three times. Nine times. Manager attitude isn’t one factor among many. It’s the single largest predictor of whether AI adoption succeeds or stalls.

The question is: why would managers—the people paid to make the organisation better—be the ones stopping it?

Five Reasons Your Managers Are Blocking AI (And Why Every One Is Rational)

The resistance isn’t stupidity. That’s the part the AI cheerleaders get wrong. Every one of these barriers reflects a genuine structural threat to the middle management function. Understanding the psychology is the only path to dismantling it.

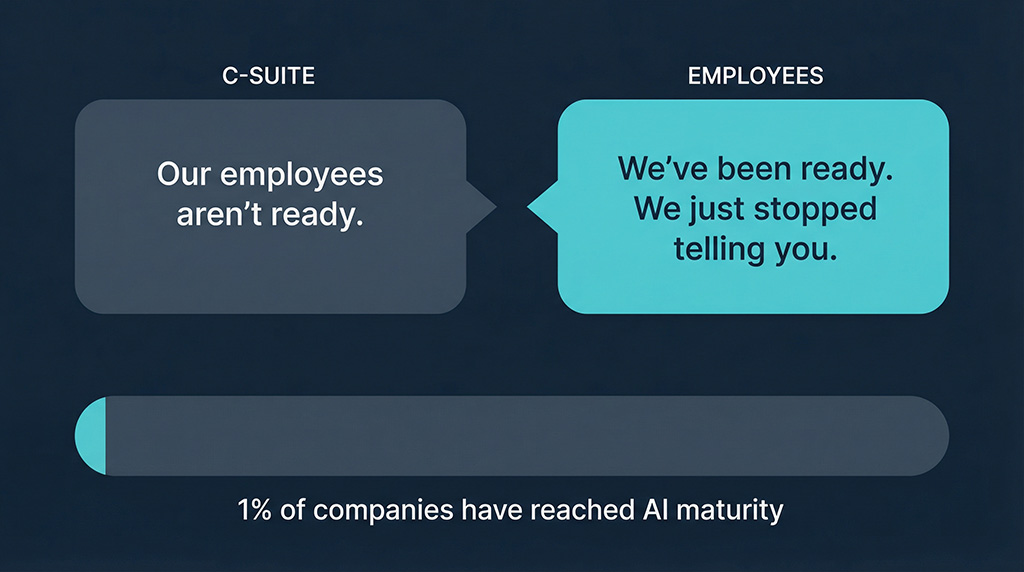

They’re afraid of becoming obsolete, and they should be. Gartner predicts that by 2026, 20% of organisations will use AI to eliminate more than half of their current middle management positions. Middle managers already made up a third of all layoffs in 2023, according to Bloomberg. The ratio of individual contributors to managers has doubled, from 3:1 in 2019 to 6:1 in 2025. Harvard Business School research found AI coding tools increased coding output by 5% while reducing project management activity by 10%. That 10% is the coordination function. The job description itself, quietly decomposing.

Harvard’s Manuel Hoffmann put it plainly: “We will likely see an increase in agility in companies that use gen AI. This will lead to the flattening of corporate hierarchies.”

If your job is coordinating specialists and AI eliminates the need for that coordination, the math isn’t ambiguous. It’s existential.

Status quo bias is doing the heavy lifting. McKinsey names it directly: a “quiet force” where people overweight the risks of adoption and underweight the risks of doing nothing. MIT Media Lab found 95% of AI pilot programs fail—and the successful 5% trace back entirely to leadership commitment, not technology quality. The tools work. The organisational will doesn’t. And the people with the most to lose from change are the ones deciding whether change happens.

AI dissolves the gatekeeper function. When analytics, content generation, creative tools, and automated workflows are accessible to everyone, managers lose their role as the sole conduits of information and coordinators of specialist talent. McKinsey estimates AI management tools can reduce overhead by 15–40%. The evidence that employees are already bypassing the gate is the concealment data we opened with: 48% not telling their manager, 57% actively concealing their use, 45% using banned tools. That’s not a forecast. It’s a census of the revolution already underway.

The skills gap is self-reinforcing. Fewer than half of companies offer any leadership-specific AI training. Thirty-one percent offer none at all. Among leaders who manage other managers, the people expected to champion this transition, only 33% are frequent AI users. Two-thirds of the officers leading the charge barely touch the weapons. Deloitte’s John Hilborn nailed the dynamic: “Sometimes managers don’t fully understand AI themselves, which creates ‘lumpy leader support’ and sows greater uncertainty among teams.”

The trust deficit creates a doom loop. This is the most dangerous pattern in the data. Managers restrict AI because they don’t trust it. Employees hide usage because they don’t trust management’s reaction. Hidden usage creates ungoverned “shadow AI”—the exact governance risk managers feared. Deloitte’s TrustID Index found trust in company-provided generative AI fell 31% in just three months. Trust in agentic AI dropped 89%.

David De Cremer, writing in Harvard Business Review, identified the root: “Employees won’t trust AI if they don’t trust their leaders.”

The loop is self-reinforcing. Restriction breeds secrecy. Secrecy breeds risk. Risk justifies more restriction. And every rotation of the cycle pushes adoption further underground, where it’s ungoverned, unaudited, and invisible to the people who think they’re managing it.

"But We're Being Responsible"

This is the objection. And it deserves a serious answer—not a dismissal.

Governance matters. Brand safety matters. The trust data is genuinely alarming: an 89% collapse in trust for agentic AI signals real concerns about reliability, hallucination, and data exposure. Samsung, Verizon, and JPMorgan Chase didn’t ban ChatGPT because their middle managers are cowards. They banned it because early deployments created legitimate security risks.

Some of the caution is warranted.

But here’s what the caution-as-strategy crowd can’t explain: if restriction worked, shadow AI wouldn’t exist. If banning tools protected the company, 45% of workers wouldn’t be using banned tools anyway. The governance argument assumes that saying “no” actually prevents usage. Every piece of data from 2024–2025 proves it doesn’t.

Restriction doesn’t create safety. It creates invisibility. And invisible AI usage (unaudited, untrained, ungoverned) is far more dangerous than sanctioned usage with guardrails, policies, and oversight.

The managers framing resistance as responsibility are solving for the wrong variable. They’re optimising for control in an environment where control has already been lost. The question isn’t whether your employees will use AI. They already are. The only question is whether they’ll do it with your guidance or without it.

The Structure They’re Defending Is Already Dead

Even if the management resistance were justified, the economic model it protects collapsed before AI entered the conversation.

Marketing budgets have flatlined at 7.7% of revenue, down from above 10% pre-pandemic. A company with $1 billion in revenue that spent $110 million (11%) on marketing in 2018 now operates on $77 million at the same revenue, a 30% cut. Fifty-nine percent of CMOs report insufficient budget to execute their core strategy. In that environment, every coordination cost becomes a target.
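The budget arithmetic above can be checked in a few lines. This is a minimal sketch under stated assumptions: a hypothetical company whose revenue stays flat at $1 billion, and an 11% marketing share in 2018 (the share implied by the $110 million figure). Only the 7.7% share and the two dollar figures come from the text.

```python
# Hypothetical company: revenue held flat at $1 billion (assumption for illustration).
REVENUE = 1_000_000_000


def marketing_budget(revenue: float, share_of_revenue: float) -> float:
    """Return the marketing budget in dollars for a given share of revenue."""
    return revenue * share_of_revenue


budget_2018 = marketing_budget(REVENUE, 0.11)   # 11% share implied by the $110M figure
budget_now = marketing_budget(REVENUE, 0.077)   # 7.7% share cited in the text

cut = budget_2018 - budget_now          # dollars removed from the budget
relative_cut = cut / budget_2018        # the same cut as a fraction of the 2018 budget

print(f"2018 budget:    ${budget_2018 / 1e6:.0f}M")
print(f"Current budget: ${budget_now / 1e6:.0f}M")
print(f"Reduction:      ${cut / 1e6:.0f}M ({relative_cut:.0%})")
```

On those assumptions the same company has $33 million less to spend, which is why coordination overhead gets scrutinised first.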

And coordination costs are staggering. Asana data shows 53% of knowledge workers’ time goes to busywork—status updates, workflow management, cross-team alignment, the organisational friction of getting humans to agree on things humans should have agreed on weeks ago. The global cost of data silos runs approximately $3.1 trillion annually. Employees spend 12 hours every week searching for disconnected data across fragmented systems.

I wrote about this in the context of agency fragmentation—five vendors, five wins, one losing business. But the same coordination tax applies inside organisations. The distributed model—multiple specialist teams coordinated by management layers—was already expensive. The ANA reports 82% of members now have in-house agencies, up from 42% in 2008. That’s not a trend. That’s a structural migration away from the model middle management was built to operate.

What AI does is finish the job.

Marketing functions achieve a 2.8x productivity multiplier through AI, the highest of any enterprise function. BCG projects a 15–25% reduction in marketing headcount requirements. One marketer with the right AI stack can execute from strategy through creation through analysis in a single workflow. I profiled this in “The $131 Blog Post That Killed the Marketing Specialist”: the “full-stack marketer” who replaces a five-person relay race with one person and a Claude subscription. That piece was about the economics of execution. This piece is about what happens when the management layer above that marketer becomes the friction.

A process that once required a content writer, designer, media buyer, analyst, and a manager to orchestrate them all now requires one person who understands all five functions and an AI that can execute them. The conductor is still necessary. The middle management layer that used to hire and supervise the orchestra? That’s the role that’s dissolving.

Klarna proved this at enterprise scale. Their AI assistant handled 2.3 million conversations in its first month. Resolution time dropped from 11 minutes to under two. They cut third-party agency spend by 25%. Revenue per employee climbed 73% year over year. The critical detail: CEO Siemiatkowski mandated 90% daily AI use across the company. He didn’t wait for middle management to get comfortable. He went through them.

HubSpot moved email marketing from segment-based to AI intent-based personalisation—82% conversion rate increase, 30% higher open rates. Starbucks’ Deep Brew platform personalises offers for 27.6 million loyalty members, driving $2.5 billion in attributed revenue.

These aren’t pilot programs. They’re operating at scale. And they share one characteristic: leadership that pushed through the management layer instead of waiting for it to volunteer.

The Compounding Price of Every Quarter You Wait

The penalty for management-driven delay isn’t a one-time miss. It compounds.

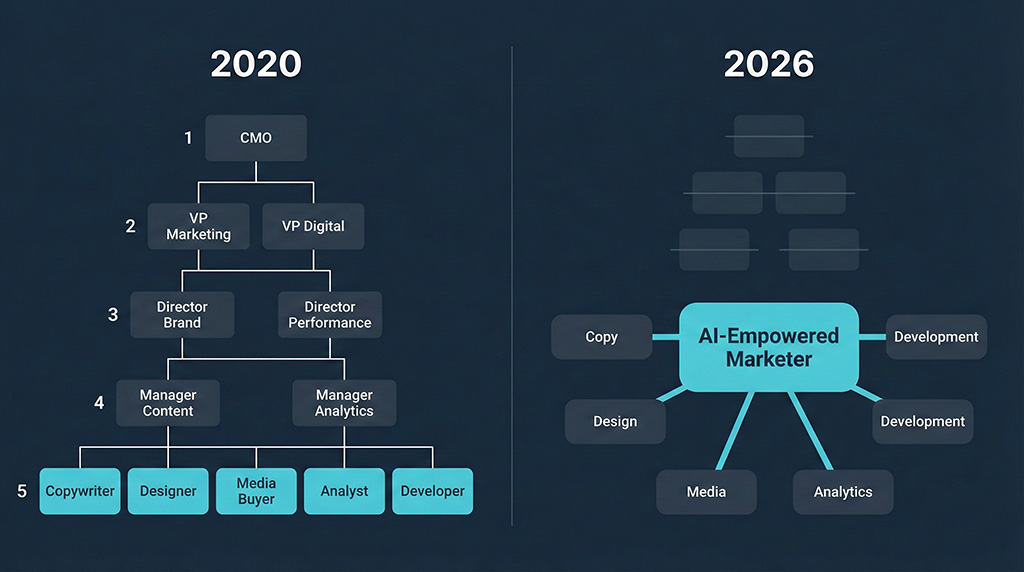

BCG’s September 2025 data shows the top 5% of AI-mature companies achieve 1.7x revenue growth, 3.6x total shareholder return, and 1.6x EBIT margin versus laggards. Sixty percent of organisations remain in the laggard category.

McKinsey projects AI front-runners could double their cash flow by 2030 while laggards see a 20% decline, a potential gap of 120 percentage points. And it widens every quarter.
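To make the size of that gap concrete, here is a minimal sketch that indexes both groups to the same starting cash flow. The baseline of 100 is a hypothetical index chosen for illustration, not a McKinsey figure. Only the doubling and the 20% decline come from the projection cited above.

```python
# Hypothetical starting cash flow, indexed to 100 for both groups (assumption).
BASELINE = 100.0

front_runner_2030 = BASELINE * 2.0          # cash flow doubles
laggard_2030 = BASELINE * (1 - 0.20)        # cash flow declines by 20%

gap_points = front_runner_2030 - laggard_2030   # gap in index points of the baseline
ratio = front_runner_2030 / laggard_2030        # front-runner relative to laggard

print(f"Front-runner: {front_runner_2030:.0f}")
print(f"Laggard:      {laggard_2030:.0f}")
print(f"Gap:          {gap_points:.0f} points of baseline")
print(f"Ratio:        {ratio:.1f}x")
```

Read this way, the two groups end 120 index points apart, and the front-runner is generating 2.5 times the laggard's cash flow from an identical starting position.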

The talent economics are equally brutal. PwC’s 2025 AI Jobs Barometer shows AI-skilled workers command a 56% wage premium, up from 25% pre-generative AI. Every year you delay, the people you need get more expensive. Meanwhile, 59% of executives report actively looking for jobs at more AI-innovative companies. Delay doesn’t just cost revenue. It haemorrhages the talent capable of closing the gap.

IgniteTech CEO Eric Vaughan invested 20% of payroll in AI training, instituted mandatory “AI Mondays,” and faced widespread resistance from his management team. He ultimately replaced nearly 80% of staff within a year. The company finished 2024 at approximately 75% EBITDA margin.

His summary: “Changing minds was harder than adding skills.”

That sentence should be tattooed on the forehead of every change management consultant in the industry. The technology isn’t the hard part. The technology has never been the hard part. The hard part is the layer of the organisation whose identity is built on a world that no longer exists—and whose rational self-interest is to make sure nobody notices.

The Slack Message That Started Everything

Jess—the coordinator who rewrote the landing page and got told to revert—never did revert. She kept using AI. She just stopped telling anyone. Six months later, her campaigns were outperforming everything else in the department. Not by a little. By the kind of margin that makes people ask questions.

Her manager eventually noticed. Not the AI usage—the results. By then, Jess had built a workflow that made his approval process irrelevant. She didn’t need him to brief the copywriter because there was no copywriter. She didn’t need him to coordinate the designer because there was no designer. She didn’t need him to schedule the analyst review because the AI gave her the data in real time.

The manager’s role didn’t get automated. It got routed around.

That’s what’s happening right now, inside your organisation, whether your management layer acknowledges it or not. The 48% who are hiding their AI use aren’t waiting for permission. They’re building the future while their managers debate whether the future should be allowed.

The role of the manager doesn’t disappear. It transforms—from gatekeeper to enabler, from coordinator of specialists to architect of AI-human workflows, from the person who approves the brief to the person who ensures the AI-empowered team has strategic clarity, data access, and the trust to move at the speed the market demands.

But that transformation requires one thing the data says most managers haven’t provided.

Permission.

Your employees are ready for AI. They’ve been ready. The only thing in the way is the layer that’s supposed to be leading them there.

Book Your Agency Waste Audit

Find out if your management layer is the bottleneck—or the multiplier.

Most businesses discover that the real cost isn’t their tools—it’s the coordination tax between the people who approve things and the people who do things. Assessment takes 5 minutes.

Jess stopped waiting for permission. Your competitors did too.